The process modeling community has a problem we need to talk about.

For years, we've refined BPMN into the universal language of business process management. We've built elegant models that capture every human task, system interaction, and decision point. We have become experts at this. In fact, a search on Amazon turns up about 18 BPMN books (counting only originals, not local-language versions or 2nd and 3rd editions). Now try a search on BPMN and Agentic AI. There is nothing that combines the two.

But the fact is, AI agents are entering our workflows, and suddenly our notation feels like we're trying to draw up NFL plays using rules written for baseball. But perhaps it isn’t quite as stark as that. Maybe we just need to look a little deeper at the existing BPMN toolset.

So, here is the question we’re trying to answer. How do we model something that thinks? But not only thinks, since humans do that and we’ve modeled them into BPMN since the beginning, but thinks faster than anything we’ve ever interacted with (yes, we know there is an argument AI today doesn’t think; it is a valid argument; but, give us some license with the word think here).

The Challenge We're All Facing

Traditional BPMN works beautifully for deterministic processes. Task A leads to Task B. System validates data. Human approves request. We know what happens at each step because we designed it that way. This is not to say BPMN and deterministic process flows are perfect. They have their warts. But we are used to them in the world we've lived in, and have found them quite useful for representing the execution of our business processes.

Enter AI agents. They operate differently. They receive a request, evaluate it, decide which tools they need, execute them (often in parallel), then evaluate if they've solved the problem. If not? They replan and try again.

This isn't just a subprocess with extra steps. It's a fundamentally different pattern that challenges how we think about process modeling. Even so, it can be modeled with current BPMN notation, as you will see shortly.

What We've Been Experimenting With

My colleagues (Eric Ducos and Cesar Prieto Jimenez) and I have been deep in the trenches, analyzing how platforms like Kore, n8n, CrewAI, and others handle agent workflows. What emerged was fairly consistent: most agents are tightly focused on their intention (the job to be done) and on the tools they have available. In other words, the set of tools available to an agent is predefined the vast majority of the time. We did not see any patterns, at least for enterprise deployments, where an agent is simply let loose into the wild without any guidance (this seems to be something further out on the horizon). The typical approach seems to be, as Ian Gotts says, “treat the agent like a brilliant intern.” Give it plenty of direction and limit its scope.

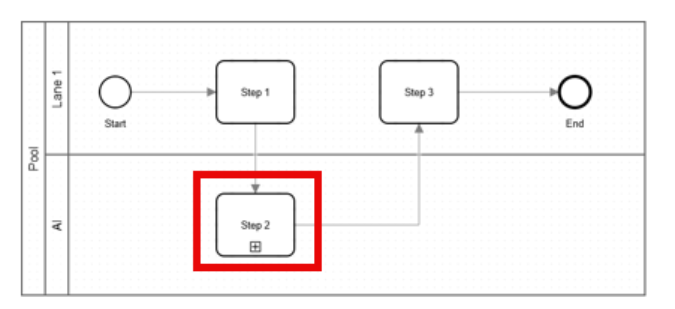

Given this, here's our current thinking: Model the agent as a subprocess, but with explicit stages that mirror how AI agents actually work today and for the foreseeable future.

Collapsed agent subprocess

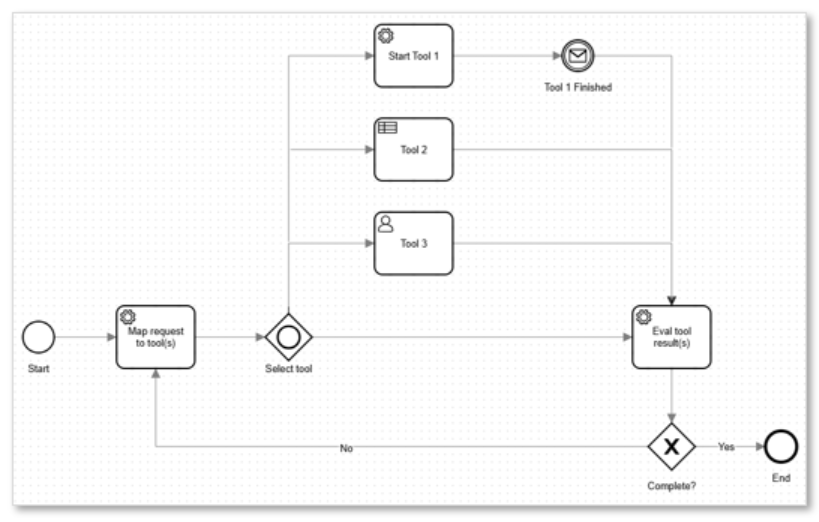

Within the subprocess, we have a step called Map request to tool(s). This is basically a planning task in which the agent evaluates the request and figures out which tools are necessary to fulfill it. The agent can invoke one tool or many; it just depends on the request and what has been modeled.

Agent subprocess

As we have modeled it now, the tools run in parallel. However, that depends entirely on the job to be done and what it requires. Tool execution is followed by an evaluation task and a subsequent gateway that determines whether the goal was achieved or another iteration is needed.
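For readers who think in code, the stages of the subprocess described above can be sketched roughly as follows. This is a minimal illustration, not how any particular platform implements it: the tool names, the stubbed planning and evaluation functions, and the `max_iterations` limit are all our assumptions for the example.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, List

# Hypothetical tool registry: the predefined set of tools the agent may call.
TOOLS: Dict[str, Callable[[str], str]] = {
    "lookup_customer": lambda req: f"customer record for '{req}'",
    "check_inventory": lambda req: f"inventory status for '{req}'",
}


def map_request_to_tools(request: str, attempt: int) -> List[str]:
    """Planning task (stubbed; a real agent would consult an LLM here)."""
    # Assumption: the first pass tries one tool, a replan widens to all tools.
    return ["lookup_customer"] if attempt == 0 else list(TOOLS)


def goal_achieved(results: Dict[str, str]) -> bool:
    """Evaluation task feeding the gateway (stubbed: every tool has reported)."""
    return set(results) == set(TOOLS)


def run_agent_subprocess(request: str, max_iterations: int = 3) -> Dict[str, str]:
    results: Dict[str, str] = {}
    for attempt in range(max_iterations):
        selected = map_request_to_tools(request, attempt)
        # Parallel tool execution, mirroring the parallel paths in the model.
        with ThreadPoolExecutor() as pool:
            futures = {name: pool.submit(TOOLS[name], request) for name in selected}
            results.update({name: f.result() for name, f in futures.items()})
        if goal_achieved(results):  # gateway: goal met, leave the loop
            return results
    # Assumed guard so a stuck agent cannot iterate forever.
    raise RuntimeError("goal not achieved within the iteration limit")
```

Calling `run_agent_subprocess("order 42")` takes two passes in this stub: the first pass runs one tool, the evaluation fails, and the replan runs both tools before the gateway lets the flow continue.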

The beauty of this approach? It works within existing BPMN notation. No extensions needed. You can simulate it, execute it, and most importantly, understand it at a glance.

But Here's What We Don't Know

This pattern works, but is it the best approach? We don’t know because this is all fairly new. That's where we need your input.

Some open questions we're grappling with:

How do we handle agents that don't follow this pattern? Reactive agents that respond to events rather than running as part of a sequence might need a completely different approach. We have a potential solution for that as well, but that is a blog for a different day.

What about cost modeling? When an agent might make anywhere from one to fifty LLM calls, how do we estimate process costs? Traditional simulation assumes predictable resource consumption.
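One way to reason about this is to stop looking for a single cost figure and simulate a distribution instead. The sketch below is only an illustration under loudly stated assumptions: the price per call, the chance that each evaluation ends the loop, and the simplification of one LLM call per iteration are all made-up values, not measurements.

```python
import random
import statistics

COST_PER_CALL = 0.02  # assumed dollars per LLM call (hypothetical)
P_GOAL_MET = 0.4      # assumed chance each evaluation ends the loop (hypothetical)
MAX_CALLS = 50        # upper bound from the "one to fifty" range


def simulate_calls(rng: random.Random) -> int:
    """Count LLM calls for one agent run, assuming one call per iteration."""
    calls = 1
    while calls < MAX_CALLS and rng.random() >= P_GOAL_MET:
        calls += 1
    return calls


def estimate_cost(runs: int = 10_000, seed: int = 7) -> dict:
    """Monte Carlo estimate of cost per process instance."""
    rng = random.Random(seed)
    costs = sorted(simulate_calls(rng) * COST_PER_CALL for _ in range(runs))
    return {
        "mean": statistics.mean(costs),
        "p95": costs[int(0.95 * runs) - 1],  # 95th percentile of simulated runs
        "max": costs[-1],
    }
```

The useful output here is the spread rather than the mean: a simulation engine could report a typical cost alongside a 95th-percentile cost, which is closer to how an agent actually behaves than a single fixed number per task.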

Should we extend BPMN notation? We could add specific symbols for agent tasks. But is that solving the problem or just adding complexity?

How do we represent tool selection? Agents choose tools at runtime based on the request. Do we model all possible tools in the diagram, or abstract this away?

How do we predict what tools the agent will use? In the past, this would be done by giving a percentage probability to each path after the “Select tool” inclusive gateway.
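If execution logs are available, those path percentages could be derived from observed runs rather than guessed. Here is a minimal sketch, assuming a hypothetical log format where each run is simply the list of tools the agent invoked; the tool names are invented for the example.

```python
from collections import Counter
from typing import Dict, List


def path_probabilities(runs: List[List[str]]) -> Dict[str, float]:
    """Share of runs in which each tool's path was taken at least once."""
    counts = Counter(tool for run in runs for tool in set(run))
    return {tool: n / len(runs) for tool, n in counts.items()}


def expected_tools_per_run(probabilities: Dict[str, float]) -> float:
    """Expected number of paths taken at the inclusive gateway.

    By linearity of expectation this is the sum of the path probabilities,
    whether or not the paths are chosen independently.
    """
    return sum(probabilities.values())


# Hypothetical log: each inner list is one run of the agent subprocess.
observed_runs = [
    ["search"],
    ["search", "calculator"],
    ["search", "email"],
    ["calculator"],
]
probs = path_probabilities(observed_runs)
```

Because an inclusive gateway can activate several paths at once, these probabilities do not need to sum to 1; in this made-up log they sum to 1.5, meaning the agent uses one and a half tools per run on average.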

The Reusability Question

One intriguing possibility: creating a library of reusable agent patterns. Imagine having pre-built subprocesses for common agent behaviors - a "research agent" pattern, a "validation agent" pattern, a "creative agent" pattern. Teams could grab these patterns and just plug in their specific tools.

We can see a scenario where a company has a bunch of pre-built agents, and these agents form a library of subprocesses available to anyone at the company modeling a process. Over time, as business processes execute and use the agents, process practitioners would have data on path percentages, LLM costs, etc. That data would make the models even more powerful and more usable by simulation engines.

But this assumes we've found the right patterns. Have we?

Where We Go From Here

The intersection of AI agents and business processes isn't going away. If anything, it's accelerating. Organizations that figure out how to effectively model, govern, and optimize agent-enhanced processes will have a massive competitive advantage.

But this isn't something we can solve alone. We need the collective wisdom of the BPMN community.

So, here's my challenge to you: Take the pattern we’ve proposed and tear it apart. Try modeling your own agent processes. What works? What breaks? What would you do differently?

Maybe you're already modeling agents in production. What patterns have emerged in your organization? What lessons have you learned?

Or perhaps you think we're approaching this all wrong. That's valuable too. Sometimes the best solutions come from completely rethinking the problem.

Let's Start a Conversation

The process modeling community has solved harder problems than this. We've created standards that work across industries, cultures, and technologies. We can figure this out too.

But it starts with admitting that our current models might be speaking the wrong language to AI agents. And it continues with all of us working together to develop a better vocabulary.

What's your take? How should we model AI agents in BPMN? What patterns are you seeing in your work?

Let's figure this out together. The future of process modeling might just depend on it.
